PyRun's core features are designed for serverless simplicity, scalability, and performance on your cloud infrastructure.

Run standard Python code seamlessly in the cloud. PyRun handles server management, scaling, and optimization automatically on your AWS account.

Enjoy a VS Code-like web IDE, automated runtime builds from `environment.yml` or a `Dockerfile`, and integrated configuration for tools like Lithops, all of which simplify setup.

Leverage first-class support for Lithops (FaaS) and Dask. Easily scale from simple scripts to massively parallel computations on AWS. (Ray and Cube support coming soon!)

Gain instant insights with detailed metrics for CPU, memory, disk, network usage, and task timelines. Understand and optimize workloads effectively.

Pay only for the AWS resources you use. PyRun's automation reduces infrastructure overhead and optimizes resource utilization, saving you time and money.

Easily select, partition, and manage data from S3 or public registries using the integrated DataPlug library for streamlined parallel processing with Lithops.

PyRun provides a VS Code-like web interface with a file browser, editor, and terminal. Get started instantly with pre-configured Dask or Lithops templates.

Customize your runtime effortlessly by editing `environment.yml` or adding a `Dockerfile`. PyRun automatically detects changes and rebuilds the environment, letting you focus solely on your code.

Explore Workspaces

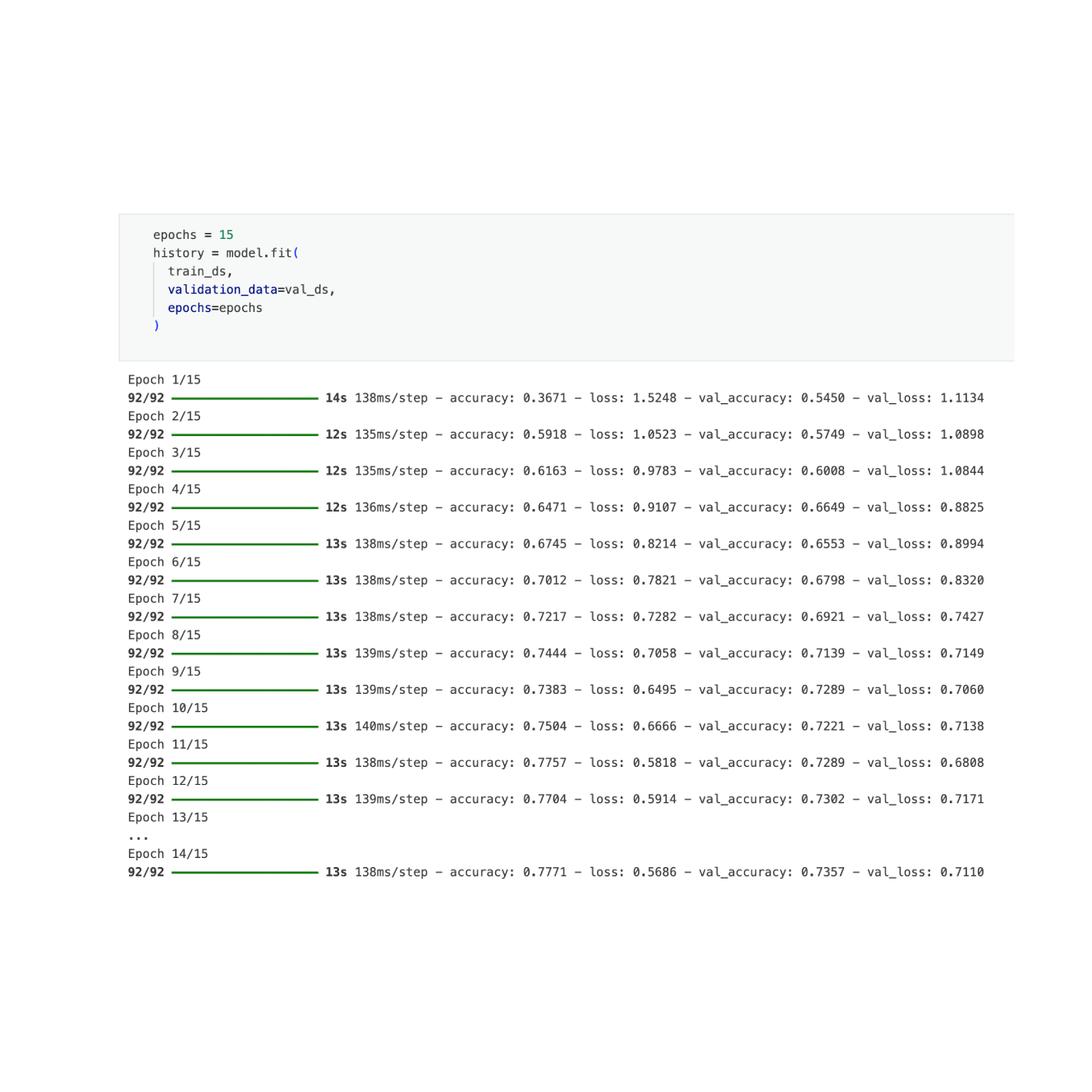

PyRun streamlines your cloud development cycle. Focus on your Python code within the integrated workspace. Define dependencies easily with `environment.yml` or a Dockerfile.

Click 'Run' to execute on your AWS account, leveraging Lithops or Dask. Monitor progress in real time, analyze results, and iterate quickly. It's cloud computing made simple.
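As a rough illustration of what a Lithops-based job in this workflow might look like, here is a minimal sketch. It uses Lithops' `FunctionExecutor`, `map`, and `get_result` calls; the helper names `count_words` and `run_parallel` are hypothetical, and the sketch assumes Lithops is already installed and configured in the workspace. If Lithops is not importable, it falls back to a plain local loop so the example still runs.

```python
from typing import Callable, Iterable, List


def count_words(text: str) -> int:
    """Count whitespace-separated words in one document."""
    return len(text.split())


def run_parallel(func: Callable, items: Iterable) -> List:
    """Apply func to every item, using Lithops when it is available.

    Hypothetical helper for illustration only; it is not part of PyRun.
    """
    items = list(items)
    try:
        # Assumption: Lithops is installed and configured in the workspace.
        import lithops
    except ImportError:
        # Local fallback so the sketch still runs without Lithops.
        return [func(item) for item in items]

    fexec = lithops.FunctionExecutor()  # picks up the active Lithops config
    fexec.map(func, items)              # one function invocation per item
    return fexec.get_result()           # blocks until all results are back
```

Calling `run_parallel(count_words, docs)` on a list of strings returns one word count per string, in input order. The same pattern applies to heavier per-item work, where spreading invocations across many serverless functions is more likely to pay off.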

See the Basic Workflow

PyRun's Data Cockpit simplifies preparing data for distributed cloud jobs. Select datasets from your S3 buckets or public registries, or upload files directly.

Intelligently partition your data using the integrated DataPlug library, optimize batch sizes with benchmarking, and generate metadata ready for parallel processing frameworks like Lithops. Spend less time on data prep, more on analysis.
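To make the idea of partitioning concrete, the sketch below splits an object of known size into fixed-size byte ranges, one per parallel task. This is plain Python and not the DataPlug API: `ByteRange` and `partition_byte_ranges` are hypothetical names, and a format-aware partitioner would also need to respect record boundaries, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ByteRange:
    start: int  # inclusive offset
    end: int    # exclusive offset


def partition_byte_ranges(total_size: int, chunk_size: int) -> List[ByteRange]:
    """Split [0, total_size) into consecutive ranges of at most chunk_size bytes."""
    if total_size < 0:
        raise ValueError("total_size must be non-negative")
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        ByteRange(start, min(start + chunk_size, total_size))
        for start in range(0, total_size, chunk_size)
    ]
```

Each range can then be handed to a separate worker, for example as the input list of a Lithops `map` call. The chunk size is the knob that batch-size benchmarking would tune: smaller chunks mean more parallel tasks but more per-task overhead.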

Learn About Data Cockpit

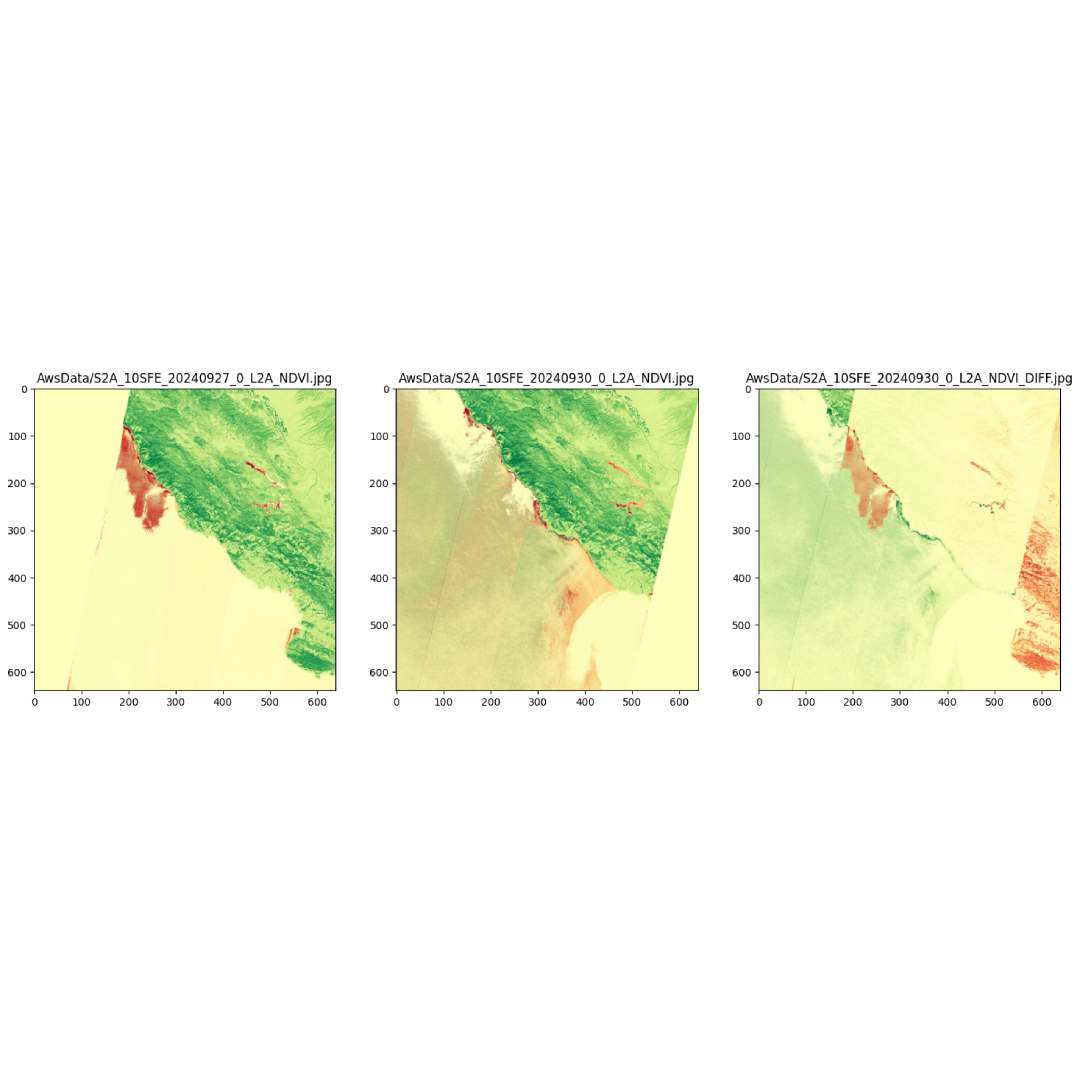

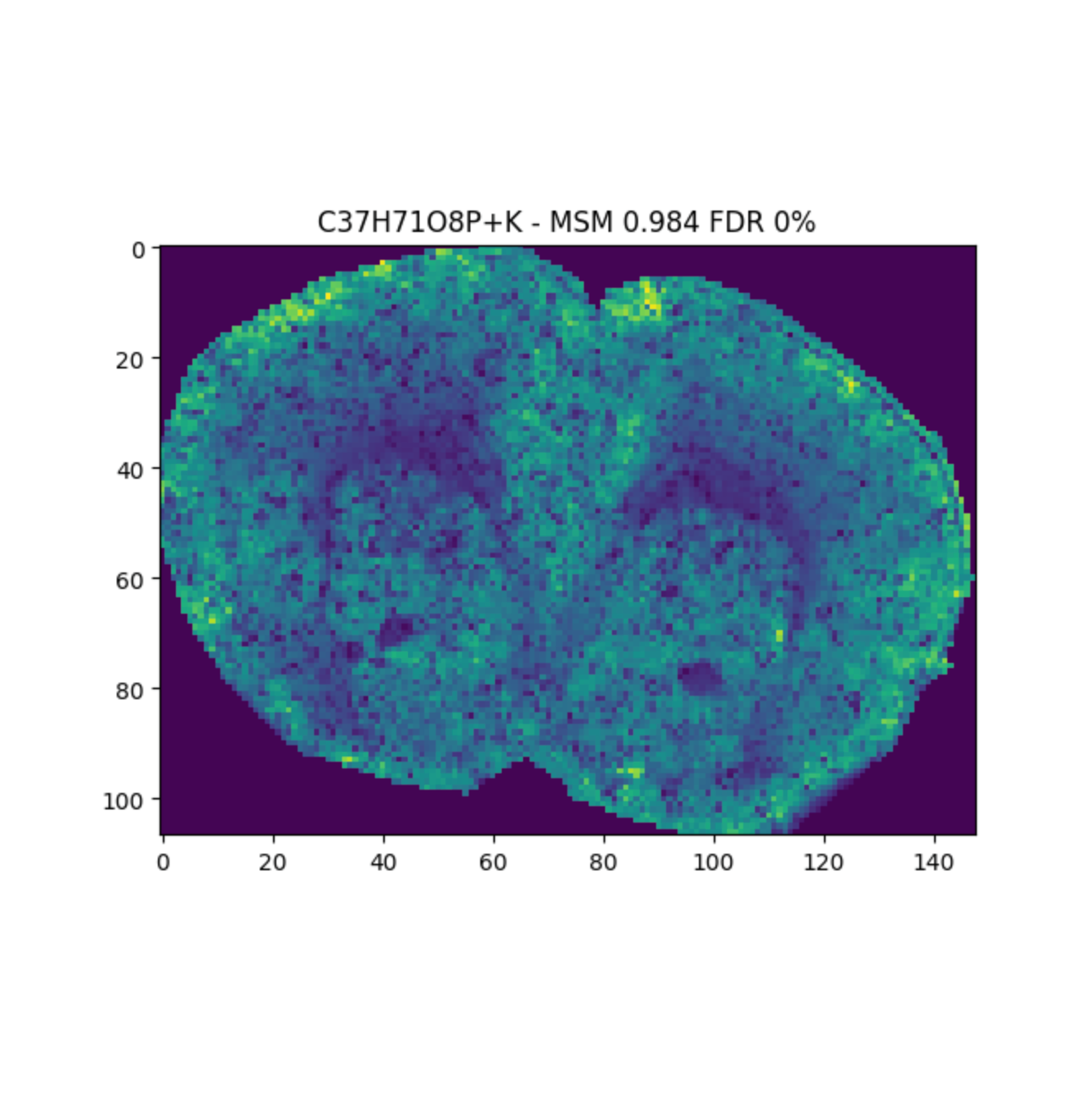

PyRun excels at handling demanding, data-intensive workflows. Below are some examples of how PyRun can be applied to complex domains such as geospatial analysis, genomics, and metabolomics, enabling serverless execution at scale.

Process and analyze large-scale geospatial datasets with serverless Python, leveraging distributed computing for rapid insights from satellite imagery, mapping data, and more.

Deploy and scale AI/ML models effortlessly. Run training jobs, inference tasks, and complex AI workflows using serverless Python for optimal resource utilization.

Streamline metabolomics data processing and analysis, handling vast datasets from mass spectrometry and other techniques using serverless Python for faster discovery.

Experience PyRun's features firsthand. Sign up and connect your AWS account in minutes to run scalable Python jobs effortlessly.

Get Started for Free